In computational linguistics, repeated segments in a text can be derived and named in various ways. The notion of n-gram is very popular but retains a mathematical flavor that is ill-suited to hermeneutical efforts.

Corpus linguistics, on the other hand, uses many definitions of multiword expressions, each suited to a specific field of linguistics.
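To make the n-gram notion concrete, the following is a minimal sketch of how repeated segments might be counted in a tokenized text. It is an illustration only, not the extraction method studied in this paper: the function name `repeated_ngrams`, the `min_count` threshold, the whitespace tokenization, and the sample sentence are all assumptions introduced for the example.

```python
from collections import Counter


def repeated_ngrams(tokens, n, min_count=2):
    """Return the n-grams (as tuples) that occur at least min_count times.

    Hypothetical helper for illustration; real term extraction would add
    linguistic filtering on top of raw counts.
    """
    # Slide a window of width n over the token list.
    grams = zip(*(tokens[i:] for i in range(n)))
    counts = Counter(grams)
    return {gram: c for gram, c in counts.items() if c >= min_count}


# Invented sample sentence, split naively on whitespace.
text = "term extraction helps summarization and term extraction helps indexing"
tokens = text.split()

bigrams = repeated_ngrams(tokens, 2)
trigrams = repeated_ngrams(tokens, 3)
```

Such counts are purely positional: the sketch treats "extraction helps" as a repeated segment just as readily as "term extraction", which illustrates why raw n-grams alone say little about which segments are meaningful multiword expressions.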

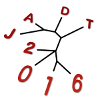

The present paper contributes to the debate on term extraction, a particular notion of multiword expression that is widely used in the summarization of scientific texts. We compare and contrast two methods of term extraction: distance-based maps and graph-based maps. The use of network theory for the automated analysis of texts is expanded here to include the concept of a community around newly identified keywords.