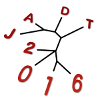

The aim of this paper is to investigate the possibility of developing a set of indicators based on textual data, so that textual material such as interviews, national strategic plans and other official documents can be used to evaluate the social impact of development projects. There has been extensive discussion about whether social impact, and its main output, Social Capital, can be defined and measured. Although a definition of Social Capital is now generally accepted and used, there is still no universally accepted monitoring and evaluation framework. In addition, using textual data to measure the social impact of a policy (without limiting the analysis to health policies) may appear ambitious and unusual. However, the hypothesis is also intriguing, given the amount of textual data available and the recent development of text mining tools and techniques. One of the most difficult parts of monitoring and evaluating social impact is collecting and processing the data. From this perspective, being able to evaluate social impact from already existing documents such as interviews, official documents and reports could be valuable not only for researchers but also for officers in the public sector and in NGOs. We decided to test our hypothesis first on a small corpus that could be analysed with both qualitative and quantitative methods. Since there are no universally accepted proxies for the presence of Social Capital, we classified our interviews manually in order to check the reliability of our method. Our case study is the Stop TB Partnership Initiative, implemented by the World Health Organization (WHO) from 2010 to 2014. Our corpus includes 13 interviews referring to the Stop TB Partnership experience. The whole corpus has a vocabulary of V = 2,324 distinct words and contains N = 22,221 occurrences.
The Type-Token Ratio (V/N) amounts to 10.459% (< 20%), and the percentage of words occurring only once (hapax legomena) is 41.179% (< 50%). The corpus was pre-processed with the dedicated software Taltac2 (Bolasco et al., 2010) and Wordstat (Provalis Research); the textual data were then processed with Wordstat and Iramuteq (Ratinaud 2009; Ratinaud and Déjean 2009). We built on this analysis to identify “partnering” components in our corpus, which consists of interviews with medical officers from different countries. We were aware that our corpus lacks homogeneity, but part of our aim was to develop a tool that can also be applied to already available data, collected for other purposes or extracted from existing datasets. Given this lack of homogeneity, besides the most frequent and most relevant words we also analysed common content words. Given also the complexity of the concepts we are looking for, we found that analysing simple word frequency, weighted frequency and distribution might not be enough. For this reason, we pre-processed our corpus with Taltac2 in order to detect meaningful multiword units (sequences of two or more words) that could help recognize partnering and Social Capital components.
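
The lexical measures above (V, N, the Type-Token Ratio and the hapax percentage) can be recomputed from any tokenised corpus. The Python sketch below is a minimal illustration, not the procedure used by Taltac2 or Wordstat: it assumes a simple lowercase, letters-only tokeniser (so its V and N would differ from the software's counts), computes the hapax percentage over V, and reads the 20% and 50% values in parentheses as upper limits for an adequately sized corpus. The function names are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Assumption: a token is a maximal run of letters, lowercased.
    # Dedicated tools such as Taltac2 apply richer normalisation rules.
    return re.findall(r"[^\W\d_]+", text.lower())


def lexical_stats(texts: list[str]) -> dict:
    counts = Counter(tok for text in texts for tok in tokenize(text))
    n_tokens = sum(counts.values())  # N: total occurrences
    n_types = len(counts)            # V: distinct words
    hapax = sum(1 for c in counts.values() if c == 1)  # words seen once
    return {
        "N": n_tokens,
        "V": n_types,
        "ttr_pct": 100 * n_types / n_tokens,  # Type-Token Ratio V/N, in %
        "hapax_pct": 100 * hapax / n_types,   # hapax legomena as % of V
    }


def adequate_size(stats: dict, ttr_max: float = 20.0, hapax_max: float = 50.0) -> bool:
    # Hypothetical helper: both ratios must stay below the assumed limits.
    return stats["ttr_pct"] < ttr_max and stats["hapax_pct"] < hapax_max


# Toy input (invented, not from the interviews):
demo = lexical_stats(["the partnership supports the programme", "the programme works"])
# demo == {"N": 8, "V": 5, "ttr_pct": 62.5, "hapax_pct": 60.0}
```

With the corpus figures reported in the text, 100 × 2,324 / 22,221 ≈ 10.459, which matches the stated Type-Token Ratio.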
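
Taltac2's own multiword detection is not reproduced here. As a rough, hypothetical approximation of the idea of detecting units of two or more words, the Python sketch below counts word sequences of two to four words that recur in the corpus, never lets a sequence cross punctuation, and discards sequences that begin or end with a function word. The stop-word list, the length range and the minimum frequency are illustrative assumptions, and the example sentences are invented rather than taken from the interviews.

```python
import re
from collections import Counter
from collections.abc import Iterator

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOPWORDS = {"the", "of", "and", "a", "to", "in", "with", "for", "on", "is", "are"}


def fragments(text: str) -> Iterator[list[str]]:
    # Split at punctuation so that a segment never crosses a clause boundary.
    for chunk in re.split(r"[.,;:!?()\n]+", text.lower()):
        words = re.findall(r"[^\W\d_]+", chunk)
        if words:
            yield words


def repeated_segments(texts: list[str], n_min: int = 2, n_max: int = 4,
                      min_freq: int = 2) -> dict[str, int]:
    counts: Counter = Counter()
    for text in texts:
        for words in fragments(text):
            for n in range(n_min, n_max + 1):
                for i in range(len(words) - n + 1):
                    seg = tuple(words[i:i + n])
                    # Skip segments that begin or end with a function word.
                    if seg[0] in STOPWORDS or seg[-1] in STOPWORDS:
                        continue
                    counts[seg] += 1
    # Keep only sequences that recur at least min_freq times.
    return {" ".join(seg): c for seg, c in counts.items() if c >= min_freq}


segments = repeated_segments([
    "Community health workers joined the partnership.",
    "We trained community health workers, and the partnership grew.",
])
# segments == {"community health": 2, "health workers": 2,
#              "community health workers": 2}
```

Filtering on the boundary words keeps content-bearing units such as "community health workers" while dropping fragments like "the partnership"; nested candidates ("community health" inside "community health workers") are all kept here and would need a further selection step.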